In my previous post I explained the I3 framework and gave a guide on how to use it. In this post I will explain how you can use it to support your planning activities, capturing the best of our current practice, ready for a post-pandemic world.

Interaction Assessment

Let me start by saying that there are a lot of ways to describe teaching and learning activities, and a lot of different frameworks and models that take you through a similar process. So why did I invent a new one? I originally designed this approach for another application, back in the early days of my first company (Ogwen Publishing, a web systems development company), as a way to unpackage a company's communication and social engagement strategies. Because I worked with a range of clients, it was purposefully high-level and generic. I have also found that many of the popular teaching and learning frameworks are over-fitted, or unintentionally subject-specific.

Most existing frameworks use specific teaching activity descriptors such as “group work”. This isn’t always particularly useful. For example, group work is still group work whether you do it online or face to face. However, group work (to continue with this example) is typically made up of a number of communication activities.

An interaction assessment abstracts pedagogies into individual channels of communication. It is intentionally reductive to keep it generic and broadly applicable. It can help you to get a good overview of all your teaching and learning processes.

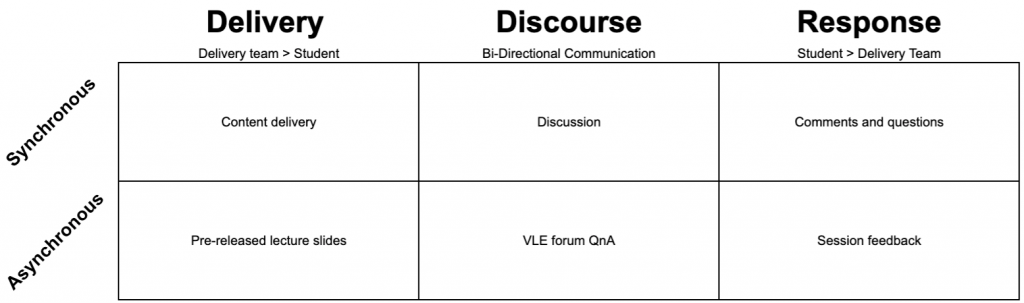

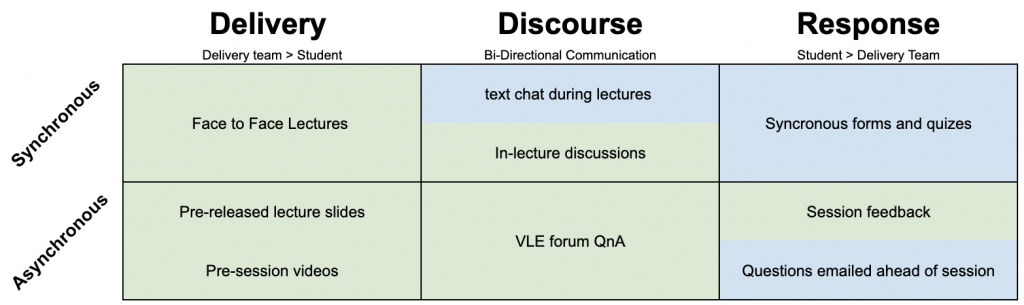

An interaction assessment categorises each element of an activity into one of three communication types: Delivery, Discourse, and Response. Originally I called these downstream, cross-stream, and upstream communications. Those terms work in a commercial setting, but the phrasing doesn't work as well in education.

- Delivery / Communication channels from the delivery team to the student.

- Discourse / Communication that is bi-directional or conversational with no single instigator or recipient.

- Response / Communication channels from the student to the delivery team.

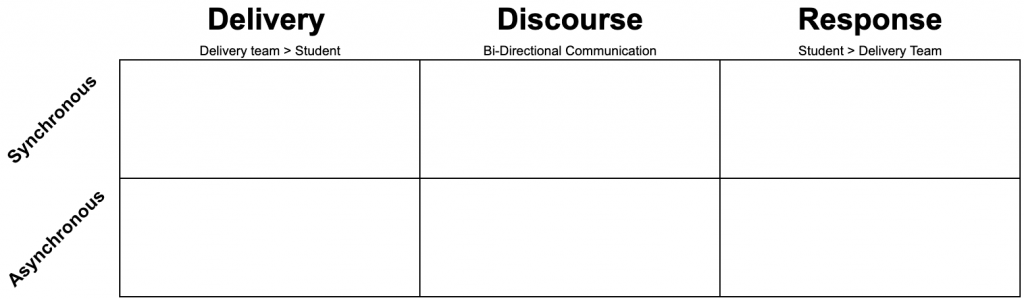

To disassemble a specific teaching practice, start by creating a grid. In the columns, write the three communication categories. In the rows, write synchronous and asynchronous (when I first used this method, we called these online and offline communications).

How does this work in practice?

I have used this method to gain insight into my own teaching practice for a few years. As a tool, it helps me to understand how I am communicating through my teaching, and how my learners are engaging. It is rare that I fully redesign my entire practice, and this helps me to abstract out individual areas that I may want to innovate.

For example, let's consider some of the communications in a typical weekly lecture and enter them into the grid.

Of course, this lecture may look very different to yours. I'm not suggesting that these are all the communication channels, or that this represents good or best practice, but it makes for a simple example.

By going through this exercise, we can quickly identify which communication channels we are using, and possibly spot missed opportunities. It also works for all teaching and learning exercises (including assessments). Once you have abstracted a teaching and learning activity into a number of communication processes, it is easy to see where you can make modifications within your practice.
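If it helps to see the grid as a data structure, here is a minimal Python sketch, assuming each activity is recorded as a (name, category, mode) tuple. The helper names `build_grid` and `empty_cells` and the example lecture activities are hypothetical illustrations, not the entries from my diagram. Listing the empty cells is one quick way to surface the missed opportunities mentioned above; an empty cell is a prompt to look closer, not proof of a gap.

```python
CATEGORIES = ("Delivery", "Discourse", "Response")  # grid columns
MODES = ("Synchronous", "Asynchronous")             # grid rows


def build_grid(activities):
    """Place (name, category, mode) tuples into the interaction assessment grid.

    Returns a dict keyed by (mode, category). Every cell is present even when
    it is empty, so gaps are as visible as filled cells.
    """
    grid = {(mode, cat): [] for mode in MODES for cat in CATEGORIES}
    for name, category, mode in activities:
        if (mode, category) not in grid:
            raise ValueError(f"{name!r} has an unknown cell: {mode}/{category}")
        grid[(mode, category)].append(name)
    return grid


def empty_cells(grid):
    """Cells with no activity: candidates for a closer look, not automatic gaps."""
    return [cell for cell, names in grid.items() if not names]


# Hypothetical entries for a weekly lecture (not the post's actual diagram).
lecture = [
    ("Live lecture", "Delivery", "Synchronous"),
    ("In-class questions", "Discourse", "Synchronous"),
    ("Pre-released slides", "Delivery", "Asynchronous"),
    ("Weekly quiz", "Response", "Asynchronous"),
]
grid = build_grid(lecture)
print(empty_cells(grid))
# → [('Synchronous', 'Response'), ('Asynchronous', 'Discourse')]
```

Keeping every cell in the dict, rather than only the filled ones, is a deliberate choice: the empty cells are often the most interesting part of the exercise.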

Using I3 within an interaction assessment

Now that you understand the I3 framework (discussed in my last post) and interaction assessments, how do you use them together? And what's the benefit?

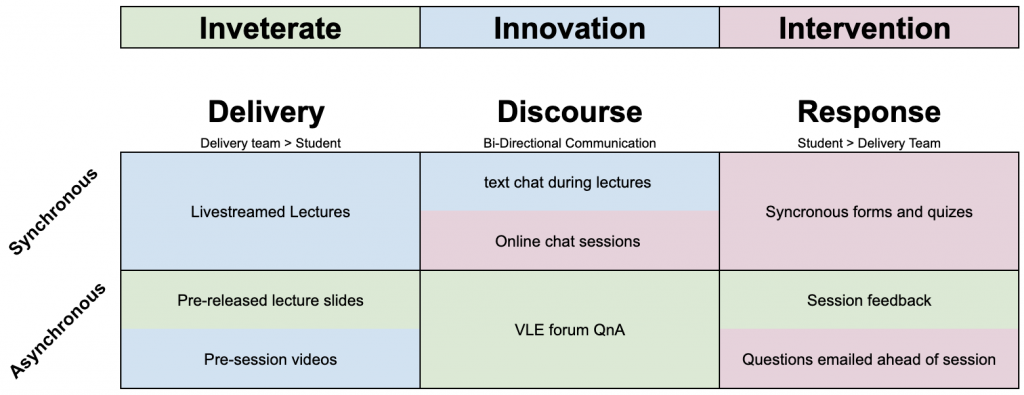

Let's consider our example lecture again. This year we had to design our modules around the COVID-19 restrictions. Although we continued with some practices as normal, we also had to make a number of modifications, which we planned over the summer. We then had to make some additional changes on the fly in response to student feedback and to changes in guidance and circumstances. To use the nomenclature of the I3 framework, we have a mix of inveterate, innovation, and intervention processes.

We can embed this information directly into our interaction assessment. In the following diagram, I have done this with a colour code. It is worth mentioning that the interaction assessment is a meta approach, and it can be applied to a number of different frameworks. Want to use the conversation framework descriptors in an interaction assessment? Go ahead!

So, what do we learn from this process? Immediately, we can see that two-thirds of this year's processes were new, and a third of all processes were interventions. As mentioned in my previous post on the I3 framework, there is significantly more work involved in implementing new processes. This delivery team is probably exhausted from the sheer number of plates they have kept spinning this year. We can also assume that having this many processes in an intervention cycle is not sustainable. Furthermore, the number of interventions will have diverted attention away from the innovations; as mentioned in the previous post, a team can only innovate if they have the time to both plan AND evaluate.
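The proportions above come from simple counting over the colour-coded grid. Here is a minimal Python sketch, assuming each process is tagged with exactly one I3 space. The helper `i3_shares` is hypothetical, and the nine placeholder processes are invented purely to reproduce the two-thirds/one-third split described above; the real entries live in the diagram.

```python
from collections import Counter
from fractions import Fraction

I3_SPACES = ("inveterate", "innovation", "intervention")


def i3_shares(processes: dict[str, str]) -> dict[str, Fraction]:
    """Return each I3 space's share of the processes as an exact fraction.

    `processes` maps a process name to its I3 space (the colour code in the grid).
    """
    counts = Counter(processes.values())
    unknown = set(counts) - set(I3_SPACES)
    if unknown:
        raise ValueError(f"Unknown I3 spaces: {sorted(unknown)}")
    total = len(processes)
    if total == 0:
        raise ValueError("No processes to summarise")
    # Counter returns 0 for spaces that never appear.
    return {space: Fraction(counts[space], total) for space in I3_SPACES}


# Hypothetical placeholders: three processes per space, nine in total.
example = {f"Process {i}": space for i, space in enumerate(I3_SPACES * 3, start=1)}
shares = i3_shares(example)
new_share = shares["innovation"] + shares["intervention"]  # everything that is new
print(new_share, shares["intervention"])  # → 2/3 1/3
```

Exact fractions are used instead of floats so that shares such as one-third add up cleanly to one.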

Prioritising and planning for next year

Now that we have our interaction assessment, we need to decide what to do with each of our processes when planning for next year.

A priority should be to move as many processes as possible from more chaotic spaces into more ordered ones: taking any successful innovations and making them inveterate, and taking successful interventions and (after taking time to plan) moving them into the innovation space. Of course, there may also be processes that we wish to abandon, or revert to a previous process.

To do this, I take all my processes from the interaction assessment and I run each through a prioritisation process of Strategic, Evaluative, and Pragmatic (SEP) interest.

- Strategic / Are there any processes which will not be strategically viable in the next iteration? For example, during the pandemic you may have relied on live-streamed lectures, but the institutional priority next year may be to move back to the classroom. If something runs counter to strategy, then the process can be abandoned/reverted at this stage.

- Evaluative / In the previous post we noted that the three categories in I3 carry different burdens of evaluation. Inveterate processes are viewed longitudinally, and one evaluation shouldn't be enough to either validate or invalidate a process, especially in the exceptional times we are living through. Innovations should have been planned with structured evaluations. If these have gone exceptionally well, you may choose to move a process from the innovation space to the inveterate space. If they haven't, you may choose to run it through another innovation cycle, or revert to a previous process. Interventions have the lowest burden of evaluation: you implemented them as a reaction to a specific event, without time for proper planning. Use the data you have to decide whether to keep them and move them into the innovation space, or, if they have served their purpose, to abandon/revert them. To use a metaphor: if you have put out a fire, you don't need to keep throwing water on the ashes.

- Pragmatic / The final stage of evaluation is pragmatism. As mentioned previously, over half of this delivery team's processes have been in relative chaos, which is unsustainable long term. Part of a pragmatic process is deciding whether you physically have the capacity to keep all your innovations and interventions operational. If not, it is sometimes worth making the difficult decision to shelve an idea and focus your efforts where you can have the most impact.
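The three SEP passes can be sketched as a small decision procedure. This is a minimal Python sketch under loud assumptions: the `Process` fields, the "good"/"mixed"/"poor" outcome labels, the numeric `priority`, and the `innovation_capacity` limit are all hypothetical simplifications of what is really a professional judgement at each step. It runs the Strategic pass first, then maps each remaining process to a suggested next space, then shelves the lowest-priority innovations beyond what the team can plan and evaluate.

```python
from dataclasses import dataclass

REVERT = "abandon/revert"


@dataclass
class Process:
    name: str
    space: str         # I3 space: "inveterate", "innovation" or "intervention"
    strategic: bool    # still aligned with school/institutional strategy?
    outcome: str       # hypothetical evaluation label: "good", "mixed" or "poor"
    priority: int = 0  # hypothetical: higher means keep first under capacity limits


def evaluative_step(p: Process) -> str:
    """Suggest a next I3 space from the current space and evaluation outcome."""
    if p.space == "inveterate":
        # Judged longitudinally: one year's evaluation alone does not move it.
        return "inveterate"
    if p.space == "innovation":
        # Strong results become standard practice; mixed ones get another cycle.
        return {"good": "inveterate", "mixed": "innovation", "poor": REVERT}[p.outcome]
    if p.space == "intervention":
        # Keep it and plan it properly, or let it go once the fire is out.
        return REVERT if p.outcome == "poor" else "innovation"
    raise ValueError(f"Unknown I3 space: {p.space!r}")


def sep(processes: list[Process], innovation_capacity: int) -> dict[str, str]:
    """Run Strategic, Evaluative, then Pragmatic passes; return name -> decision."""
    plan = {}
    for p in processes:
        # Strategic: anything counter to strategy is abandoned/reverted first.
        plan[p.name] = evaluative_step(p) if p.strategic else REVERT
    # Pragmatic: keep only as many innovations as the team can plan AND evaluate,
    # shelving the lowest-priority ones.
    innovations = sorted(
        (p for p in processes if plan[p.name] == "innovation"),
        key=lambda p: p.priority,
        reverse=True,
    )
    for p in innovations[innovation_capacity:]:
        plan[p.name] = "shelve"
    return plan


# Usage: outcomes, priorities, and "Intervention A/B/C" are hypothetical placeholders.
procs = [
    Process("Live-streamed lectures", "innovation", strategic=False, outcome="good"),
    Process("Pre-session videos", "innovation", strategic=True, outcome="good"),
    Process("Text chat in MS Teams", "innovation", True, "mixed", priority=3),
    Process("Intervention A", "intervention", True, "mixed", priority=2),
    Process("Intervention B", "intervention", True, "mixed", priority=1),
    Process("Intervention C", "intervention", True, "mixed", priority=0),
]
plan = sep(procs, innovation_capacity=3)
```

With four candidate innovations and a capacity of three, the lowest-priority one comes back as "shelve". The ordering matters: strategy is applied before evaluation so that effort is not spent evaluating processes that will be reverted anyway.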

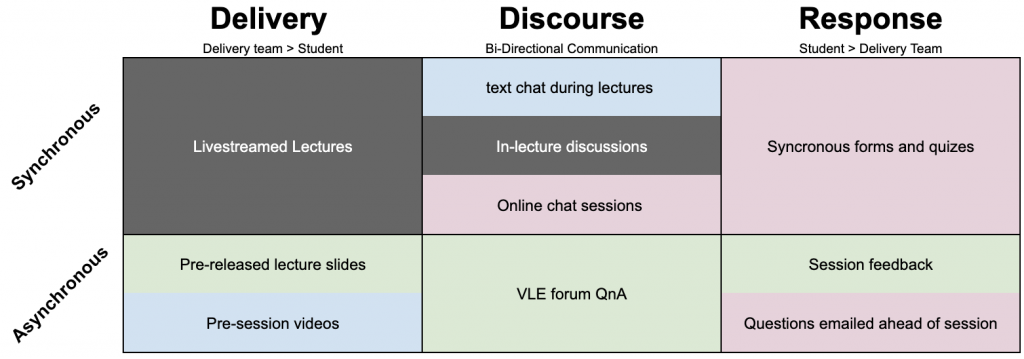

For the purpose of example, let's assume we have gone through this process, starting with strategy. At the University of Imagination, the strategic priority will be to move lectures back on campus next year, so we will probably need to revert our live-streamed lectures. We could look at the evaluations and decide whether it is worth keeping this process but applying it elsewhere, but for the sake of simplicity, this is the first innovation that we will revert. Furthermore, moving back into the classroom allows us to bring back a debate activity that we used to run.

All our other processes still align with the school and institutional strategy, so we will keep them in the pool for now.

Now we look at our evaluations, starting with inveterate processes (green). In this case, we note that some things have actually improved: students are using the VLE more and accessing the pre-released lecture slides earlier. However, as students are online more, this is possibly just an artefact of the pandemic, and not enough to warrant celebration just yet. We commit to looking at this more closely next year.

Next, we look at the innovation processes (blue). In this case, we planned a detailed evaluation and have some data to work from. We find that the text chat in MS Teams was very popular; however, we don't know how to continue with this process if we are abandoning the live-streamed lectures. We put this into another innovation cycle so we can replan and adapt the process for next year. The pre-session videos were also well received, we have a library of recorded content, and there is no impact from the change of strategy, so we can confidently move this into an inveterate cycle.

Finally, we look at the interventions (pink). Here we responded to a challenge and have very little data to work with, so we are largely working from intuition, our experience as educators, and student engagement (more about this in the next section). All three processes seemed to go as well as can be expected, so we can take what we have learned and plan all three as innovations next cycle.

In this case we didn't decide to

Brilliant: we have moved most of our processes along the chain, stabilising our practice and creating space to develop key innovations. However, we now have four innovations to focus on, and we may still decide that this is spreading ourselves too thin (especially when we consider the evaluative burden that innovations come with). In this case, the delivery team has decided that it is too much, and (despite the practice showing promise) they have decided to shelve the “online chat sessions” for next year. They may revisit this area in the future if they are able to move more processes into the inveterate space, or if they are able to free up time elsewhere (conversations with management can be useful here).

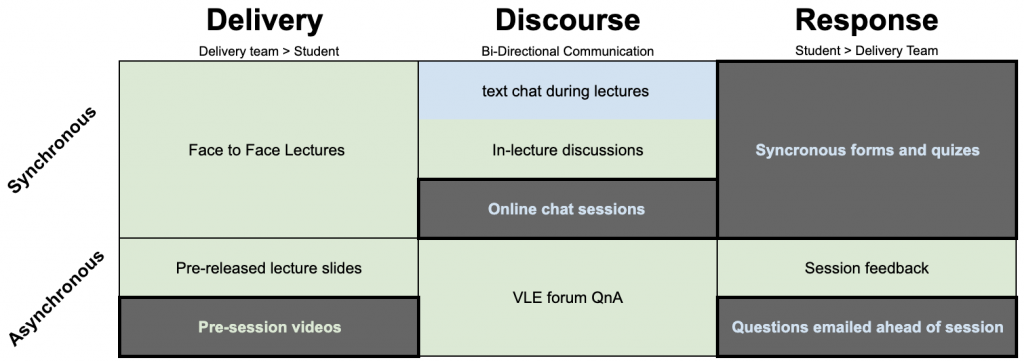

This leaves them with the following plan for next year.

By going through this process, we have managed to:

- Determine which areas of our new practice we are going to keep.

- Move our practice from more chaotic spaces into more ordered and strategic spaces.

- Clearly define where we are focusing our creative energies, and identify where we will be innovating (and, by association, where we may need support).

- Save the delivery team significant time. The more chaotic the process, the more time it takes to manage it. The majority of our processes are now inveterate, and we have clearly defined our innovation spaces.

This is a small example: one weekly lecture. Furthermore, in this example, the fictional team actually held onto a lot of their old practice. This often isn't the case! However, even with this simple example, we have helped this team to make sense of how to consolidate their efforts and move away from “fighting fires”. Hopefully, it is clear how this can apply to a variety of contexts and help teams to make sense of their activities. If not, get in touch!

Student Engagement

In any evaluation piece, it is important that students are engaged, both as stakeholders in the process and as experts in their own right. Students experience a range of teaching processes, which gives them an invaluable perspective.

So how does I3 align with student engagement?

Inveterate processes, due to their ongoing nature, will probably be evaluated largely through institutional structures such as the module evaluation, which engages students passively. However, module evaluations may not have the fidelity to capture detail at the process level. Consider how you are talking to your students, and feel confident asking them directly about specific processes to ensure that your standard practices continue to be fit for purpose.

Innovation processes should be planned with evaluation in mind; honestly, this is one of the key takeaways from the past two blog posts. Student feedback, surveys, focus groups, etc. should almost always be included as part of this evaluation piece. Students represent half of a teaching and learning relationship: they are either the recipient or the instigator of a communication process, and excluding them would severely limit an evaluation.

Finally, interventions will always suffer from weaker data due to their “act first, plan later” nature. As I mentioned earlier in this post, our sense, experience, and intuition often guide us in judging how useful or valuable these ideas have been. However, we can add a lot of value to this process by engaging with students and simply asking the question. An intervention cycle is a short-term, rapid cycle of react, analyse, and modify. The more student data we can add to the analysis step, the better the decisions we can make along the way, improving the intervention's chance of success and helping us to quickly transition it into an innovation.

I am starting to think that I should write a whole blog post on engaging students in practice evaluation. Another time, maybe.

I will close with this point. When I go through a SEP, I almost always use students to drive pragmatic decisions about what I keep and what I shelve (or abandon). I have even taken students through the whole SEP process in the past. It gives them a useful peek behind the curtain of practice, and I benefit from a valuable perspective.

Final Thoughts

I truly hope you have found these posts useful. Please let me know if you are planning to use I3, interaction assessments, or SEP prioritisation in your future planning activities. Of course, there are many ways to solve a problem, and my approach is just one framework, so I would love to hear what you think in the comments.
